The world of artificial intelligence (AI) took a massive leap forward with the emergence of ChatGPT in November 2022. It has been a game-changer across industries including healthcare, retail, financial services, manufacturing and others.

Organisations are no longer asking whether to use AI but how to adopt it to reduce operational costs, increase efficiency, grow revenue or improve customer experience.

The challenges

While the emergence of AI is transformative, this powerful tool is not without its challenges, notably the profound privacy concerns it raises. These include:

Data collection. AI models rely on extensive datasets to learn and mimic human-like behaviour or provide valuable insights. These datasets often draw on diverse content from the internet, which may inadvertently contain personal or special category data, or sometimes even data exposed in a breach. As AI models evolve, their training datasets are likely to grow larger, increasing the risk of personal and special category data being included.

Algorithmic bias and discrimination. AI systems are trained on vast amounts of existing data, which may inherently incorporate biases. If left unchecked, algorithms trained on such data may perpetuate those biases and lead to decisions that negatively impact certain groups of people, even though the organisation has no intention of discriminating.

Data subject requests. Once AI systems are trained and deployed, responding to certain data subject requests becomes increasingly difficult. To satisfy requests by data subjects for rectification of data or deletion of their data used in training the AI system, organisations may need to retrain the model, take it down or find an alternative resolution.

Transparency and informed consent. As AI systems become commonplace, users will increasingly interact with them unknowingly, including instances where they are affected by automated decision-making. Additionally, the consent provided by these individuals may not be valid if they are not aware of how their data will be used.

Data breaches. Multiple high-profile data breaches have occurred, including one at OpenAI (the company behind ChatGPT). Organisations must ensure that personal and special category data is stored and processed securely when collecting and using extensive datasets to train AI systems.

Regulatory requirements and industry standards. Organisations must demonstrate compliance with existing and upcoming regulations and standards, including the General Data Protection Regulation (GDPR), the upcoming EU AI Act, ISO 22989, and the National Institute of Standards and Technology’s AI risk management framework, in order to maintain customer trust and meet procurement standards.

Misuse of personal data in AI-enabled cyberattacks. Malicious actors have begun using AI to create more sophisticated and effective phishing attempts and other scams, exploiting personal data such as audio clips and deep-fake content.

Inaccurate responses. It is common for generative AI programs to respond on the basis of probabilities identified within their training datasets instead of actual, accurate data points. This can result in inaccurate responses and may cause issues if users do not verify the authenticity of the system’s responses.

Best practice

To address these concerns, organisations should consider the following best practices:

AI governance. A system of rules, processes, structures (including an AI committee), frameworks and technological tools should be set up and documented to ensure ethical and responsible development and use of AI systems. The teams involved should be interdisciplinary, including those who work in AI development, legal, privacy, information security, customer success and others.

Privacy by design. This principle is the foundation of responsible AI, and data protection and privacy considerations must be implemented throughout the development lifecycle. Some AI systems have a black box-like nature that makes it harder to detect and fix ethical, privacy and regulatory issues after deployment, increasing the need for privacy by design. Processes that pose too high a risk to automate fully through AI will require controls such as ‘a human in the loop’.

Transparency. Users must be provided with clear and transparent communication which includes the confirmation that AI systems are used to process their data, how their data is collected and processed, how long it will be stored, and their rights, to help ensure informed consent and build trust.

Fairness. Regular testing and audits of AI systems, including the automated decision-making algorithm, will guard against bias or discrimination against users. In some circumstances, it may be necessary to fine-tune learning models and incorporate ethical guidelines into AI training.
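
One way such a recurring fairness test could look is sketched below. It is a minimal illustration rather than a prescribed method: the function names, the sample data and the idea of comparing approval rates between groups are all assumptions introduced here, and a real audit would normally use several metrics chosen with legal and domain experts.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the share of positive outcomes per group.

    `decisions` is an iterable of (group, approved) pairs, where `approved`
    is True when the automated decision was favourable. Both the input shape
    and the function name are hypothetical, chosen for illustration.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Return the lowest group rate divided by the highest group rate.

    A value of 1.0 means all groups receive favourable outcomes at the same
    rate; values well below 1.0 suggest the system deserves closer review.
    """
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favourable outcome
    return min(rates.values()) / highest


# Illustrative, made-up audit sample: group label and decision outcome.
sample = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
rates = selection_rates(sample)
ratio = disparity_ratio(rates)
```

A check like this could run on a schedule against logged decisions, with results reported to the AI governance committee. The threshold at which a ratio triggers investigation is a policy choice for the organisation and is deliberately left out of the sketch.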

Data management. This includes ensuring the data ingested by the AI system during training is lawfully obtained and of high quality, and that rigorous vetting and anonymisation have been performed. Techniques such as pseudonymisation or data aggregation should be implemented to support compliance with the privacy principles of data minimisation and storage limitation. Up-to-date records of processing activities should also be maintained to ensure data is managed effectively throughout its lifecycle.
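
As a rough illustration of pseudonymisation and data minimisation before training, the sketch below replaces direct identifiers with keyed-hash tokens and drops fields the model does not need. The field names, the `prepare_record` helper and the choice of HMAC-SHA256 are assumptions made for this example, not a recommended standard. Pseudonymised data generally remains personal data under the GDPR, so the secret key must be stored separately and protected.

```python
import hashlib
import hmac


def pseudonymise(value, secret_key):
    """Return a stable keyed-hash token for a direct identifier.

    The value is normalised (trimmed, lower-cased) so the same identifier
    always maps to the same token. Without the key, the token cannot be
    recomputed from a guessed identifier.
    """
    normalised = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalised, hashlib.sha256).hexdigest()


def prepare_record(record, secret_key, identifier_fields, drop_fields):
    """Build a training-ready copy of `record` (a hypothetical helper).

    Fields in `drop_fields` are removed (data minimisation); fields in
    `identifier_fields` are replaced by pseudonymous tokens; everything
    else is copied unchanged.
    """
    cleaned = {}
    for field, value in record.items():
        if field in drop_fields:
            continue
        if field in identifier_fields:
            cleaned[field] = pseudonymise(str(value), secret_key)
        else:
            cleaned[field] = value
    return cleaned


# Illustrative record with made-up values and field names.
raw = {"email": "Jane@Example.com", "phone": "0000000", "age_band": "30-39"}
key = b"example-key-load-from-a-secrets-manager"  # placeholder, never hard-code
training_row = prepare_record(raw, key, {"email"}, {"phone"})
```

Here `training_row` keeps the coarse `age_band` value, carries a 64-character token in place of the email address and has no `phone` field at all.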

Organisations may not use publicly available data to train AI systems without a valid lawful basis. The Italian data protection authority temporarily banned ChatGPT out of concern that there was no legal basis for its use of publicly available datasets.

Risk management, compliance and information security. A risk-based approach, including a data protection impact assessment, should be implemented to assess the level of risk involved before AI systems are deployed. The organisation should also sign off on the risk levels, controls and mitigations.

AI compliance monitoring should be incorporated into the organisation’s regulatory compliance programme or its privacy programme. The wider organisational infosecurity programme should also include AI systems and their underlying data to prevent data breaches and other malicious attacks. Technical and organisational measures such as encryption, data masking, password management, access controls and network security should be implemented.

Employee training. As AI is a new technology, employees must be trained periodically in using it responsibly. Training should include the privacy impact of AI systems, compliance with data protection regulations while using AI, misuse of personal data in AI-enabled cyberattacks and how to guard against it, and data protection best practices.

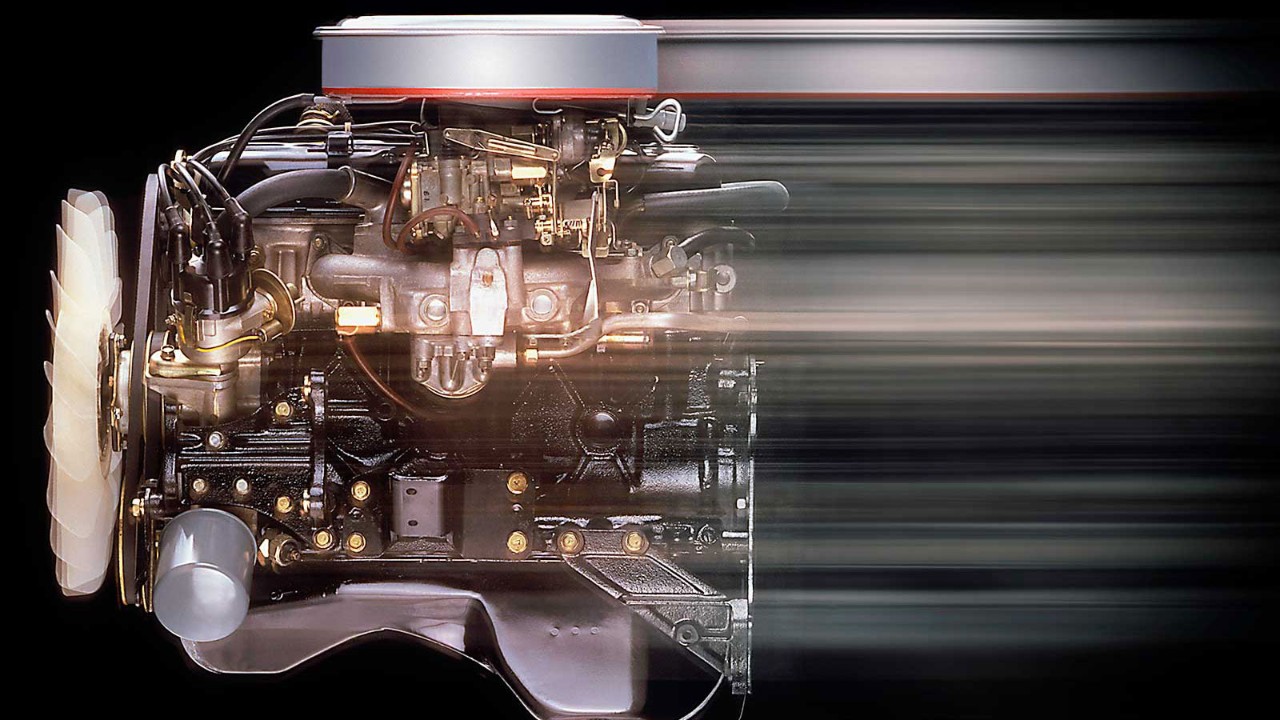

The advent of AI may be compared to the invention of the combustion engine. While organisations can move faster with AI, they will also require stronger brakes. Organisations will be responsible for ensuring that AI-driven innovation aligns with the values of privacy, ethics and user trust.

More information

Read the Department of Public Expenditure, NDP Delivery and Reform’s Interim Guidelines for Use of AI